This chapter extends the face recognition program from an earlier chapter so that it sends a message to the IoT hub. The full code is shown below. The IoT hub additions are the `iothub_client` imports, the connection settings, the functions for sending the message, and the call to `iothub_client_telemetry_sample_run()` in the main loop:

```python
# USAGE
# python pi_face_recognition.py --cascade haarcascade_frontalface_default.xml --encodings encodings.pickle

# import the necessary packages
from imutils.video import VideoStream
from imutils.video import FPS
import face_recognition
import argparse
import imutils
import pickle
import time
import cv2
import sys
import random

# Using the Python Device SDK for IoT Hub:
#   https://github.com/Azure/azure-iot-sdk-python
# The sample connects to a device-specific MQTT endpoint on your IoT hub.
import iothub_client
from iothub_client import IoTHubClient, IoTHubClientError, IoTHubTransportProvider, IoTHubClientResult
from iothub_client import IoTHubMessage, IoTHubMessageDispositionResult, IoTHubError, DeviceMethodReturnValue
### imports finished ###

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-c", "--cascade", required=True,
    help="path to where the face cascade resides")
ap.add_argument("-e", "--encodings", required=True,
    help="path to serialized db of facial encodings")
args = vars(ap.parse_args())

# The device connection string to authenticate the device with your IoT hub.
# Using the Azure CLI:
# az iot hub device-identity show-connection-string --hub-name {YourIoTHubName} --device-id MyNodeDevice --output table
CONNECTION_STRING = "HostName=TeamProject.azure-devices.net;DeviceId=RaspberryPi;SharedAccessKey={YourSharedAccessKey}"

# Using the MQTT protocol.
PROTOCOL = IoTHubTransportProvider.MQTT
MESSAGE_TIMEOUT = 10000

# Default message text; it is overwritten as soon as a face is detected.
MSG_TXT = "You should not see this"

### Functions for sending the message ###
def send_confirmation_callback(message, result, user_context):
    # called by the SDK when the IoT hub acknowledges the message
    print("IoT Hub responded to message with status: %s" % (result))

def iothub_client_init():
    # Create an IoT Hub client
    client = IoTHubClient(CONNECTION_STRING, PROTOCOL)
    return client

def iothub_client_telemetry_sample_run():
    try:
        client = iothub_client_init()
        # Build the message from the current text in MSG_TXT.
        message = IoTHubMessage(MSG_TXT)
        # Add a custom application property to the message.
        # An IoT hub can filter these properties without accessing the message body.
        # prop_map = message.properties()
        # if temperature > 30:
        #     prop_map.add("temperatureAlert", "true")
        # else:
        #     prop_map.add("temperatureAlert", "false")

        # Send the message.
        print("Sending message: %s" % message.get_string())
        client.send_event_async(message, send_confirmation_callback, None)
        time.sleep(1)
    except IoTHubError as iothub_error:
        print("Unexpected error %s from IoTHub" % iothub_error)
        return
### End functions for sending message ###

# load the known faces and embeddings along with OpenCV's Haar cascade
# for face detection
print("[INFO] loading encodings + face detector...")
data = pickle.loads(open(args["encodings"], "rb").read())
detector = cv2.CascadeClassifier(args["cascade"])

# initialize the video stream and allow the camera to warm up
print("[INFO] starting video stream...")
vs = VideoStream(src=0).start()
# vs = VideoStream(usePiCamera=True).start()
time.sleep(2.0)

# start the FPS counter
fps = FPS().start()

# loop over frames from the video file stream
while True:
    # grab the frame from the threaded video stream and resize it
    # to 500px (to speed up processing)
    frame = vs.read()
    frame = imutils.resize(frame, width=500)

    # convert the input frame from (1) BGR to grayscale (for face
    # detection) and (2) from BGR to RGB (for face recognition)
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

    # detect faces in the grayscale frame
    rects = detector.detectMultiScale(gray, scaleFactor=1.1,
        minNeighbors=5, minSize=(30, 30),
        flags=cv2.CASCADE_SCALE_IMAGE)

    # OpenCV returns bounding box coordinates in (x, y, w, h) order
    # but we need them in (top, right, bottom, left) order, so we
    # need to do a bit of reordering
    boxes = [(y, x + w, y + h, x) for (x, y, w, h) in rects]

    # compute the facial embeddings for each face bounding box
    encodings = face_recognition.face_encodings(rgb, boxes)
    names = []

    # loop over the facial embeddings
    for encoding in encodings:
        # attempt to match each face in the input image to our known
        # encodings
        matches = face_recognition.compare_faces(data["encodings"], encoding)
        name = "Unknown"

        # check to see if we have found a match
        if True in matches:
            # find the indexes of all matched faces then initialize a
            # dictionary to count the total number of times each face
            # was matched
            matchedIdxs = [i for (i, b) in enumerate(matches) if b]
            counts = {}

            # loop over the matched indexes and maintain a count for
            # each recognized face
            for i in matchedIdxs:
                name = data["names"][i]
                counts[name] = counts.get(name, 0) + 1

            # determine the recognized face with the largest number
            # of votes (note: in the event of an unlikely tie Python
            # will select the first entry in the dictionary)
            name = max(counts, key=counts.get)

        # Define the message to send to IoT Hub.
        MSG_TXT = "%s detected" % (name)

        # update the list of names
        names.append(name)

    # loop over the recognized faces
    for ((top, right, bottom, left), name) in zip(boxes, names):
        # draw the predicted face name on the image
        cv2.rectangle(frame, (left, top), (right, bottom), (0, 255, 0), 2)
        y = top - 15 if top - 15 > 15 else top + 15
        cv2.putText(frame, name, (left, y), cv2.FONT_HERSHEY_SIMPLEX,
            0.75, (0, 255, 0), 2)
        # send message to IoT Hub
        iothub_client_telemetry_sample_run()

    # display the image to our screen
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break

    # update the FPS counter
    fps.update()

# stop the timer and display FPS information
fps.stop()
print("[INFO] elapsed time: {:.2f}".format(fps.elapsed()))
print("[INFO] approx. FPS: {:.2f}".format(fps.fps()))

# do a bit of cleanup
cv2.destroyAllWindows()
vs.stop()
```

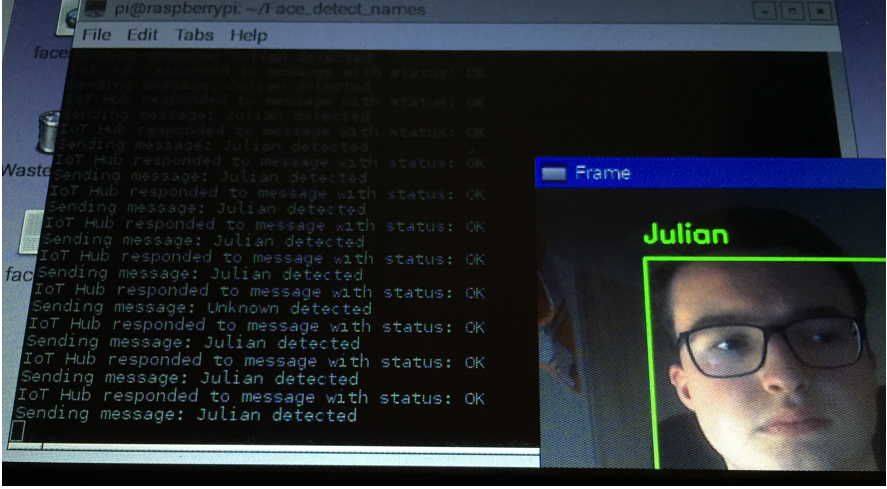

The code connects to the IoT hub with the connection string of the device named "RaspberryPi" that is registered in my IoT hub. Whenever a face is recognized, a message is sent to the IoT hub, which is attached to a storage container. The IoT hub then sends back an acknowledgment, and `send_confirmation_callback` prints whether the status of the message is OK.
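The connection string is a semicolon-separated list of `Key=Value` fields. As a quick sanity check before the client is created, it can be split into its parts. The helper below is a minimal sketch: `parse_connection_string` is a hypothetical name and is not part of the Azure IoT SDK, and it assumes exactly the three fields used in the program (`HostName`, `DeviceId`, `SharedAccessKey`).

```python
# Hypothetical helper (not part of the Azure IoT SDK): splits a device
# connection string of the form
#   "HostName=<hub>.azure-devices.net;DeviceId=<device>;SharedAccessKey=<key>"
# into a dict so its fields can be checked before connecting.
REQUIRED_FIELDS = ("HostName", "DeviceId", "SharedAccessKey")


def parse_connection_string(conn_str):
    fields = {}
    for part in conn_str.split(";"):
        part = part.strip()
        if not part:
            continue  # tolerate a trailing semicolon
        # Split on the first "=" only: a base64 key may itself end in "=".
        key, sep, value = part.partition("=")
        if not sep:
            raise ValueError("malformed field: %r" % part)
        fields[key.strip()] = value.strip()
    missing = [name for name in REQUIRED_FIELDS if name not in fields]
    if missing:
        raise ValueError("missing fields: %s" % ", ".join(missing))
    return fields


if __name__ == "__main__":
    # dummy key, for illustration only
    conn = "HostName=TeamProject.azure-devices.net;DeviceId=RaspberryPi;SharedAccessKey=abc123=="
    print(parse_connection_string(conn)["DeviceId"])  # RaspberryPi
```

Checking that `DeviceId` matches the device registered in the hub catches a copy-paste mistake before any network call is made.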

In the picture above you can see that a message is sent to the IoT hub whenever a face is detected. The message contains the name of the person detected.
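The name in the message comes from a vote: `face_recognition.compare_faces` returns one boolean per known encoding, and the name with the most matching encodings wins. The same logic can be written as a small pure function that is easy to test without a camera. This is a sketch, not the program's own code: `vote_for_name` and `build_message_text` are hypothetical names, and `collections.Counter` replaces the hand-written dictionary count.

```python
from collections import Counter


def vote_for_name(matches, known_names, unknown="Unknown"):
    """Return the label with the most matching known encodings, or `unknown`.

    matches[i] is True when the face matched known encoding i,
    whose label is known_names[i] (like data["names"] in the program).
    """
    counts = Counter(name for name, hit in zip(known_names, matches) if hit)
    if not counts:
        return unknown
    # most_common keeps first-encountered order on ties,
    # like max(counts, key=counts.get) in the program
    return counts.most_common(1)[0][0]


def build_message_text(name):
    # same text format as MSG_TXT in the program
    return "%s detected" % name
```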

IMPORTANT:

Messaging only works if the Azure IoT SDKs are installed. Another known problem is that the connection to the IoT hub only works if the `iothub_client.so` file is in the same folder as the face recognition Python file. The `iothub_client.so` file can be found in the folder `azure-iot-sdk-python\c\cmake\iotsdk_linux\python\src`.

To work around this problem, I put the azure-iot-sdk-python folder in the same folder as the face recognition program and also copied the `iothub_client.so` file there. After these changes the code worked.
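An alternative to copying the file, which I have not tested on the Raspberry Pi, is to add the SDK's build folder to Python's module search path before importing `iothub_client`. Python searches the directories in `sys.path` for modules, including compiled `.so` extensions, and the folder of the running script is one of them, which is likely why copying the file works. The sketch below assumes the `.so` was built for the same Python version that runs the program; `add_sdk_path` and `locate_module` are hypothetical helper names.

```python
import importlib
import importlib.util
import os
import sys


def add_sdk_path(sdk_src_dir):
    """Prepend sdk_src_dir to sys.path so modules in it (e.g. iothub_client.so)
    become importable. Returns False if the directory does not exist."""
    sdk_src_dir = os.path.abspath(sdk_src_dir)
    if not os.path.isdir(sdk_src_dir):
        return False
    if sdk_src_dir not in sys.path:
        sys.path.insert(0, sdk_src_dir)
    return True


def locate_module(name):
    """Return the file Python would load for `name`, or None if not found."""
    importlib.invalidate_caches()  # pick up files created after startup
    spec = importlib.util.find_spec(name)
    return spec.origin if spec is not None else None


if __name__ == "__main__":
    # Assumed location of the SDK build output relative to the working directory.
    add_sdk_path("azure-iot-sdk-python/c/cmake/iotsdk_linux/python/src")
    print(locate_module("iothub_client"))  # None if the module is still missing
```

Calling `add_sdk_path(...)` at the top of the face recognition script, before `import iothub_client`, would have the same effect as copying the file, as long as the path points at the folder that contains `iothub_client.so`.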

Final Raspberry Pi face recognition with IoT Hub message

The zip file above contains the final version of the program. The folder already includes the `iothub_client.so` file; the complete azure-iot-sdk-python folder was too big to upload.

The folder also contains a command file with the command to start the program.